Project Description

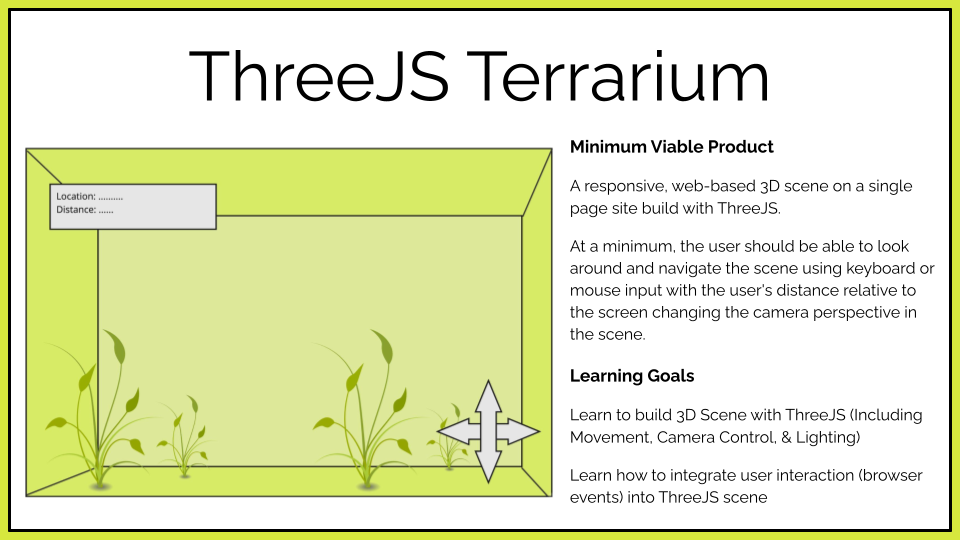

ThreeJS “Terrarium” is a coding project I completed over the course of three weeks: an experiment in hands-free 3D navigation.

It is intended to serve as a proof-of-concept for ML face tracking as an intuitive camera controller for 3D environments. Potential applications include hands-free CAD orbit controls and game navigation.

Click here for Live Demo

Warning: expect the initial load to take a while. The demo contains a number of large, unoptimized assets; as this was my first web project, I did not take loading speed into consideration.

General Interface and Object Manipulation Demo

This demo is built using the Webgazer library for face tracking, the KalmanJS library for camera stabilization and responsiveness, and the ThreeJS and React Three Fiber (R3F) libraries for 3D scene creation.

Supporting tools include Blender, Photoshop, and gltfjsx.

Navigation Modes & Keyboard Controls

The controller currently responds accurately to left/right, up/down, and forward/backward head movements, which are used to control panning, zoom, and field of view. Eye-gaze predictions from Webgazer are still too inaccurate to replace the mouse for raycasting (picking objects in the scene by where the user is looking).
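Before raw face data can drive a camera, it has to be reduced to these three axes. The TypeScript below is a minimal sketch of one way to do that, assuming the tracker yields a face bounding box in video-frame pixels; the `FaceBox` shape, `normalizeFace`, and the reference-width calibration are hypothetical and are not Webgazer's API. Because a single webcam gives no depth, forward/backward motion is approximated here from the apparent face width relative to a width recorded at the user's neutral position.

```typescript
// Hypothetical tracker sample: a face bounding box in video-frame pixels.
// This shape is an assumption for illustration, not Webgazer's output format.
interface FaceBox {
  x: number;      // left edge (px)
  y: number;      // top edge (px)
  width: number;  // box width (px)
  height: number; // box height (px)
}

// Normalized head offset; each axis is clamped to [-1, 1].
interface HeadPose {
  x: number; // user's left (-1) to right (+1)
  y: number; // down (-1) to up (+1)
  z: number; // backward (-1) to forward (+1)
}

const clamp = (v: number, lo: number, hi: number): number =>
  Math.min(hi, Math.max(lo, v));

// refWidth: face width (px) recorded at the user's neutral position,
//   e.g. when face tracking is reset (hypothetical calibration step).
// flipX: raw (unmirrored) webcam frames show the user's right on the image's
//   left, so x is negated to make "move right" positive.
function normalizeFace(
  face: FaceBox,
  frameWidth: number,
  frameHeight: number,
  refWidth: number,
  flipX = true,
): HeadPose {
  const cx = face.x + face.width / 2;
  const cy = face.y + face.height / 2;
  // Map the face center from [0, frame size] to [-1, 1].
  const rawX = (cx / frameWidth) * 2 - 1;
  // Image y grows downward, so negate it to make "up" positive.
  const y = -((cy / frameHeight) * 2 - 1);
  const x = flipX ? -rawX : rawX;
  // No depth from one webcam: use apparent face size as a proxy.
  // A face wider than the reference means the user has leaned forward.
  const z = face.width / refWidth - 1;
  return { x: clamp(x, -1, 1), y: clamp(y, -1, 1), z: clamp(z, -1, 1) };
}
```

Dividing by the frame size keeps the output independent of webcam resolution, and recording `refWidth` at a reset keeps `z` near zero at the user's resting distance.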

Camera control modes

- Built-in R3F manual orbit controller (click and drag to orbit, scroll to zoom)

- Custom Inspection Mode (Fixed focus, Face-driven position & zoom)

- Custom Navigation Mode (Fixed position, Face-driven rotation)
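The two face-driven modes differ only in what the head pose moves. The sketch below assumes a normalized head pose with each axis roughly in [-1, 1] and uses made-up tuning constants. In inspection mode the head orbits the camera around a fixed target and leaning in shortens the distance; in navigation mode the camera stays put and the head turns it, with pitch clamped as a simple controller limit. The function names and constants are illustrative, not taken from the project.

```typescript
type Vec3 = { x: number; y: number; z: number };
// Normalized head pose, each axis roughly in [-1, 1] (z > 0 = leaning forward).
type HeadPose = { x: number; y: number; z: number };

// Hypothetical tuning constants, not taken from the project.
const MAX_ORBIT = Math.PI / 4;   // inspection: max azimuth/elevation swing (rad)
const ZOOM_RANGE = 0.5;          // inspection: distance varies by up to ±50%
const MAX_TURN = Math.PI / 3;    // navigation: max turn from a full head offset (rad)
const PITCH_LIMIT = Math.PI / 6; // navigation: pitch limit to keep the view near level

// Inspection mode: the look-at target is fixed; head x/y swing the camera
// around it on a sphere, and leaning forward moves the camera closer.
function inspectionCamera(head: HeadPose, target: Vec3, baseDistance: number): Vec3 {
  const azimuth = head.x * MAX_ORBIT;
  const elevation = head.y * MAX_ORBIT;
  const distance = baseDistance * (1 - ZOOM_RANGE * head.z);
  return {
    x: target.x + distance * Math.cos(elevation) * Math.sin(azimuth),
    y: target.y + distance * Math.sin(elevation),
    z: target.z + distance * Math.cos(elevation) * Math.cos(azimuth),
  };
}

// Navigation mode: the camera position is fixed; head x/y turn the view.
// Follows the ThreeJS convention: the camera looks down -z, positive yaw
// turns left about +y, positive pitch tilts up about +x.
function navigationRotation(head: HeadPose): { yaw: number; pitch: number; forward: Vec3 } {
  const yaw = -head.x * MAX_TURN; // head to the right -> look right
  const pitch = Math.min(PITCH_LIMIT, Math.max(-PITCH_LIMIT, head.y * MAX_TURN));
  // Unit view direction: pitch applied first, then yaw about the vertical axis.
  const forward = {
    x: -Math.sin(yaw) * Math.cos(pitch),
    y: Math.sin(pitch),
    z: -Math.cos(yaw) * Math.cos(pitch),
  };
  return { yaw, pitch, forward };
}
```

In ThreeJS the results would typically be applied with `camera.position.set(...)` followed by `camera.lookAt(...)` for inspection, or `camera.rotation.set(pitch, yaw, 0, "YXZ")` for navigation. The `YXZ` Euler order is the usual choice for first-person cameras because it keeps yaw about the world's vertical axis, which matches how `forward` is derived above.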

ThreeJS Scene features

- Rendering of 3D models, environment, lighting, etc.

- Animation, physics, click-event & keyboard responses

UI & Controls

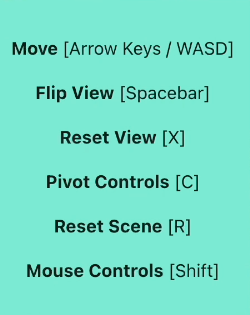

Keyboard Controls

- Click to focus on object

- Arrow Keys/WASD to move

- Spacebar to flip the view 180°

- Shift to switch to mouse control

- X to reset to default view

- C to enable pivot controls

- R to reset scene
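The shortcuts above amount to a lookup from key to action. Here is a minimal TypeScript sketch, assuming the handler receives `KeyboardEvent.key` values; the `Action` names and `actionForKey` are hypothetical, and treating the arrow keys as the same forward/back/left/right movements as WASD is an assumption about what "move" means here.

```typescript
// Hypothetical action names; the project's own identifiers are not known.
type Action =
  | "moveForward" | "moveBackward" | "moveLeft" | "moveRight"
  | "flipView"      // Spacebar: turn the view 180°
  | "mouseControl"  // Shift: switch to mouse control
  | "resetView"     // X: reset to default view
  | "pivotControls" // C: enable pivot controls
  | "resetScene";   // R: reset scene

// Keys are lower-cased KeyboardEvent.key values, so "W" and "w" match alike.
// A Map avoids accidental hits on inherited object properties.
const KEY_ACTIONS = new Map<string, Action>([
  ["arrowup", "moveForward"], ["w", "moveForward"],
  ["arrowdown", "moveBackward"], ["s", "moveBackward"],
  ["arrowleft", "moveLeft"], ["a", "moveLeft"],
  ["arrowright", "moveRight"], ["d", "moveRight"],
  [" ", "flipView"],
  ["shift", "mouseControl"],
  ["x", "resetView"],
  ["c", "pivotControls"],
  ["r", "resetScene"],
]);

// Returns the bound action, or null for keys with no binding.
function actionForKey(key: string): Action | null {
  return KEY_ACTIONS.get(key.toLowerCase()) ?? null;
}
```

In the browser this would be wired up with `window.addEventListener("keydown", (e) => ...)`, passing `e.key` to `actionForKey`. Lower-casing means the letter shortcuts still work while Shift or Caps Lock is active, and keeping every binding in one table makes it easy to list them in the UI.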

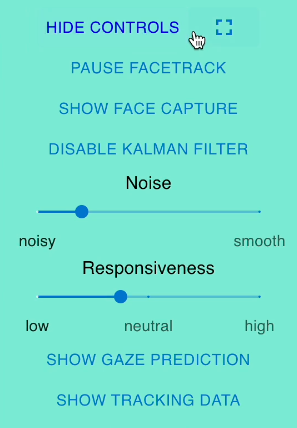

Panel Controls

- Full-screen mode

- Pause/start face tracking

- Show & reset face tracking

- Image stabilization filter settings

- Show/hide gaze tracking

- Show/hide normalized sensor data

- View Mode Button (Inspect vs Navigate)

Project Background

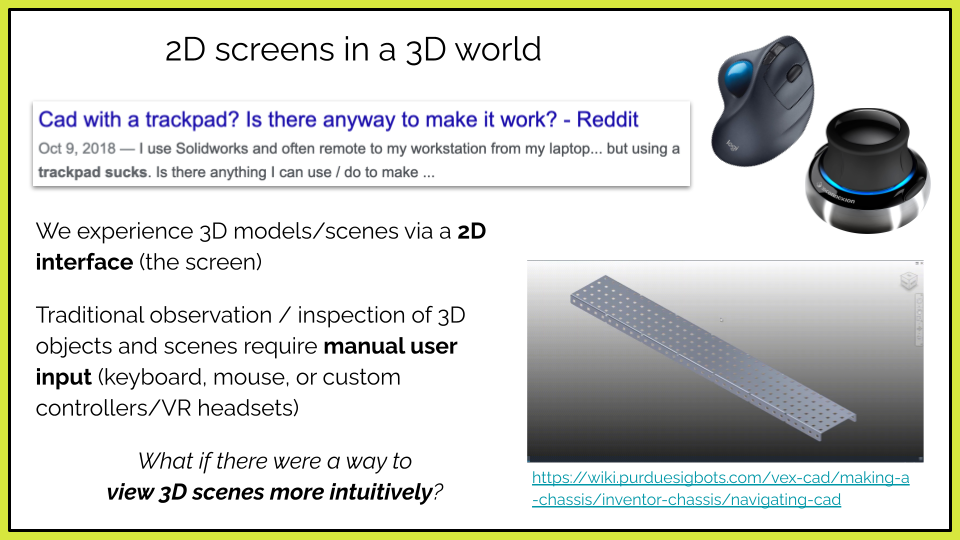

I decided to tackle an interface problem that I have dealt with personally in the past: model manipulation and navigation in 3D scenes.

These days, we can accurately simulate and manipulate 3D environments digitally through video game engines or CAD software, but we still interact with them through a 2D screen, so we can only see one perspective at a time.

In 3D modeling, you often have to use manual orbit controls to gain perspective on what you are working on. This often interrupts the editing workflow and is less intuitive than observing an object in the real world.

So I wondered if there was a more intuitive way to control view navigation than current offerings, one that didn’t require additional hardware.

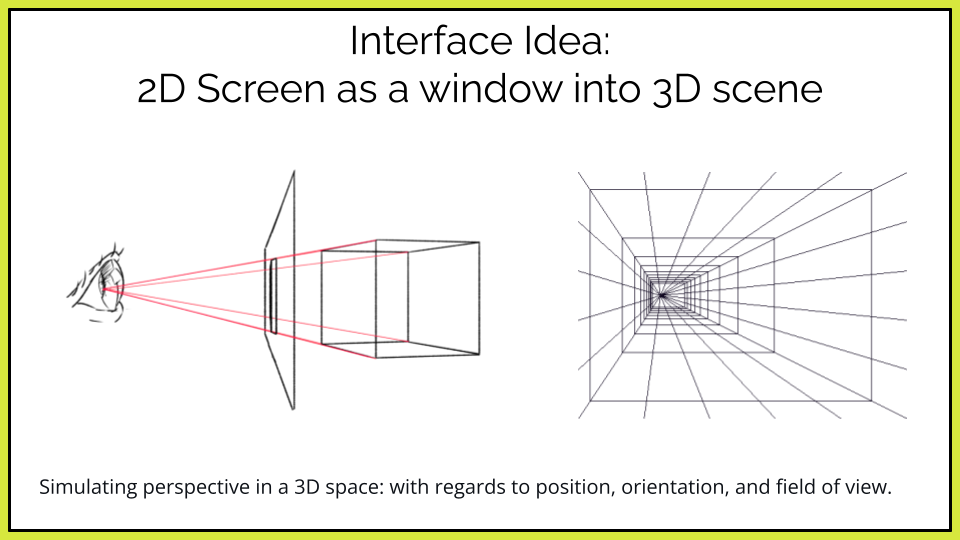

I decided that the most intuitive interface would be to treat the screen a bit like a window and have the 3D scene shift and zoom according to the user’s head and eye movements. If I could figure out how to actually track where the user was, this would let them look around an object in a very organic way.

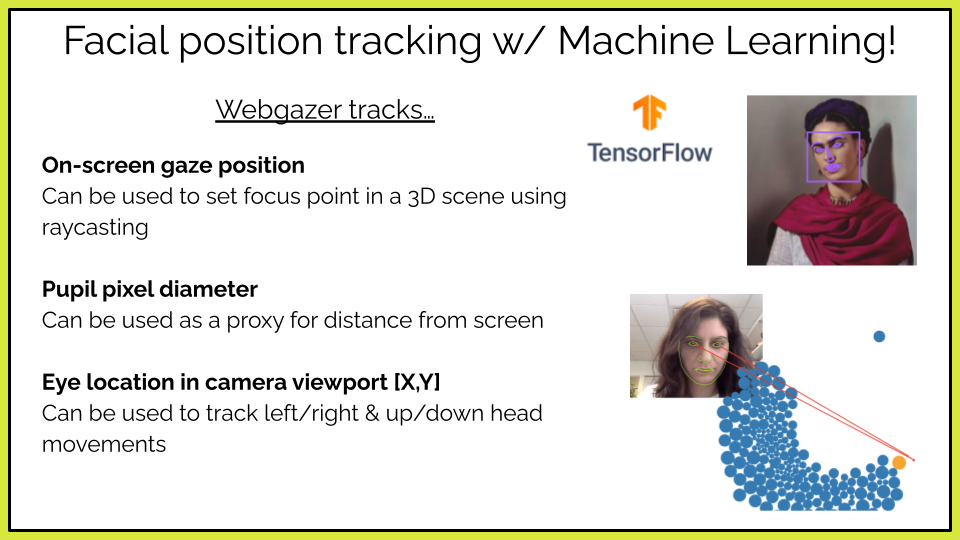

Enter Machine Learning!

I did some research and found a number of open-source, webcam-based facial tracking libraries that use TensorFlow.js, an ML library written in JavaScript. After getting a simple demo working on my local machine, I settled on the Webgazer library, as it seemed to provide all the data I needed to track a user’s face position.

Now that I had a way to capture facial data, I began to develop a hands-free camera control system and a 3D environment to demonstrate its functionality.

To keep my project browser-based, I decided to use ThreeJS, a JavaScript library for building WebGL scenes, as the core technology for my environment and navigation system.

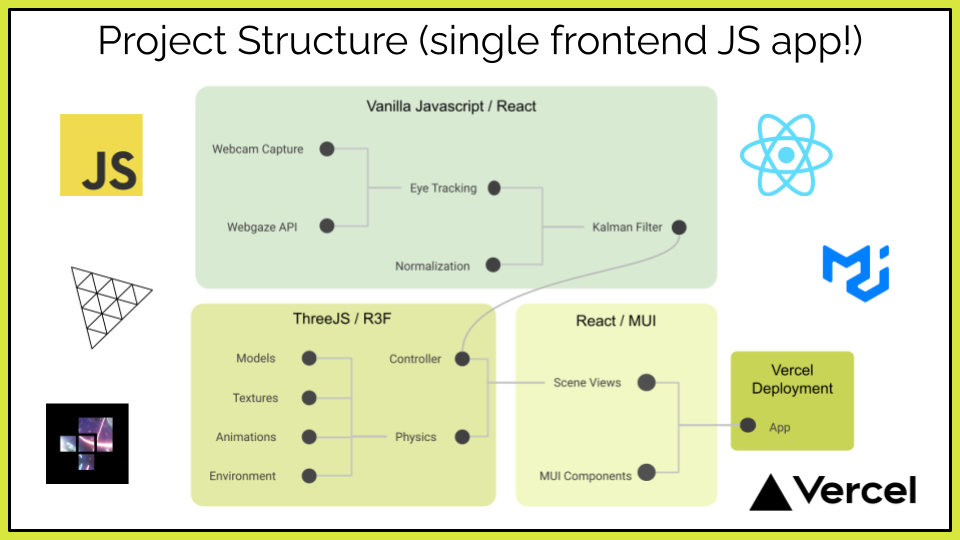

Here is a high-level overview of my project structure and the technologies that I used.

- I wrote components to capture the raw face position data from Webgazer, normalize that data, and filter it through a control algorithm for image stabilization.

- That control data was then sent to my ThreeJS controller and scene components, which used it to update the camera views in the running scene.

- I also had UI components for the user to update and change aspects of the camera control method and filter in real-time.
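The filtering stage is what keeps the camera from jittering with every noisy tracker sample. The project uses KalmanJS for this; the TypeScript below is not KalmanJS's code but a minimal from-scratch sketch of the same idea: a one-dimensional Kalman filter with a random-walk model, run independently on each head axis. `ScalarKalman`, `HeadPoseFilter`, and the noise parameter names are hypothetical.

```typescript
// Minimal one-dimensional Kalman filter with a random-walk model:
// the true value is assumed to drift slowly between samples.
// Illustrative sketch only; this is not the KalmanJS implementation.
class ScalarKalman {
  // Public so a settings panel could adjust them while running.
  processNoise: number;     // expected drift of the true value per sample
  measurementNoise: number; // expected noise in each tracker sample
  private estimate = 0;     // current filtered value
  private variance = 0;     // uncertainty of the estimate
  private initialized = false;

  constructor(processNoise: number, measurementNoise: number) {
    this.processNoise = processNoise;
    this.measurementNoise = measurementNoise;
  }

  filter(measurement: number): number {
    if (!this.initialized) {
      // First sample: take it as-is, with the sensor's own uncertainty.
      this.estimate = measurement;
      this.variance = this.measurementNoise;
      this.initialized = true;
      return this.estimate;
    }
    // Predict: the value may have drifted, so uncertainty grows.
    const predictedVariance = this.variance + this.processNoise;
    // Update: move toward the new sample by the Kalman gain (between 0 and 1).
    const gain = predictedVariance / (predictedVariance + this.measurementNoise);
    this.estimate += gain * (measurement - this.estimate);
    this.variance = (1 - gain) * predictedVariance;
    return this.estimate;
  }
}

interface HeadPose { x: number; y: number; z: number }

// Hypothetical wrapper: one independent filter per head axis.
class HeadPoseFilter {
  private readonly axes: [ScalarKalman, ScalarKalman, ScalarKalman];

  constructor(processNoise: number, measurementNoise: number) {
    this.axes = [
      new ScalarKalman(processNoise, measurementNoise),
      new ScalarKalman(processNoise, measurementNoise),
      new ScalarKalman(processNoise, measurementNoise),
    ];
  }

  filter(pose: HeadPose): HeadPose {
    const [fx, fy, fz] = this.axes;
    return { x: fx.filter(pose.x), y: fy.filter(pose.y), z: fz.filter(pose.z) };
  }
}
```

A larger `measurementNoise` relative to `processNoise` gives a smoother but laggier camera; a smaller one makes it more responsive but jittery. This trade-off is what real-time filter settings would let a user tune.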

As you can see, all my code and the libraries I used are JavaScript-based, so the entire project fits into a single frontend app that does all input processing and rendering client-side.

Future Improvements

All in all, I am happy with what I was able to achieve with this project over the past three weeks. If I were to continue, here are some improvements I would make:

Realistic:

- Build a lighter-weight scene to serve as the demo / experiment environment

- Learn more about control and navigation methods in ThreeJS (e.g., quaternions)

- Add controller limits and improve the navigation mode

- Create more control options and filter setting presets for various camera modes

- Integrate the Webgazer library into the site source code rather than referencing it externally.

Reach:

- Create a custom version of the Webgazer library better suited to the project’s needs

- Consolidate the code into a single add-on library, separate from the demo scene.